Projects List

- Soft nonholonomic constraints: Theory and applications to optimal control

- Design and Modeling of a Novel Single-Actuator Differentially Driven Robot

- Depth estimation from edge and blur estimation

- Occlusion detection & handling in monocular SLAM

- Autonomous Underwater Vehicle for Monitoring of Maritime Pollution

- Teleoperation of UAV with Haptic Feedback

- Advanced Control Strategies for Unmanned Aerial Vehicles

- Ground Vehicles Driver Assistance and Active Safety Control Systems

- Pedestrian Detection

- Towards Fully Autonomous Self-Supervised Free Space Estimation

- Object-Oriented Structure from Motion

- Humanoid Fall Avoidance

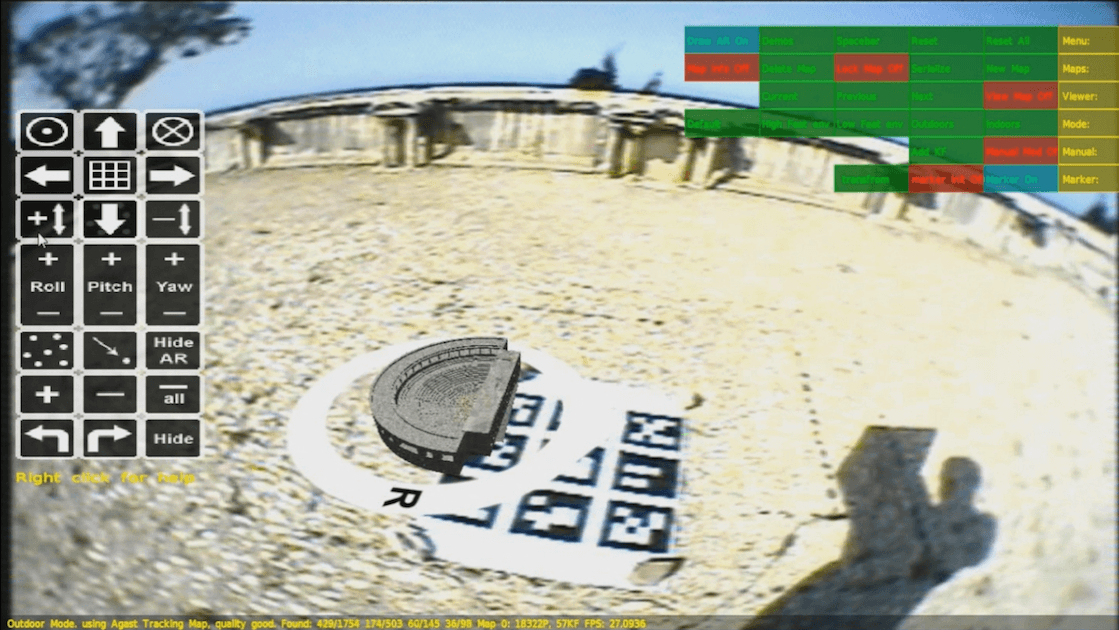

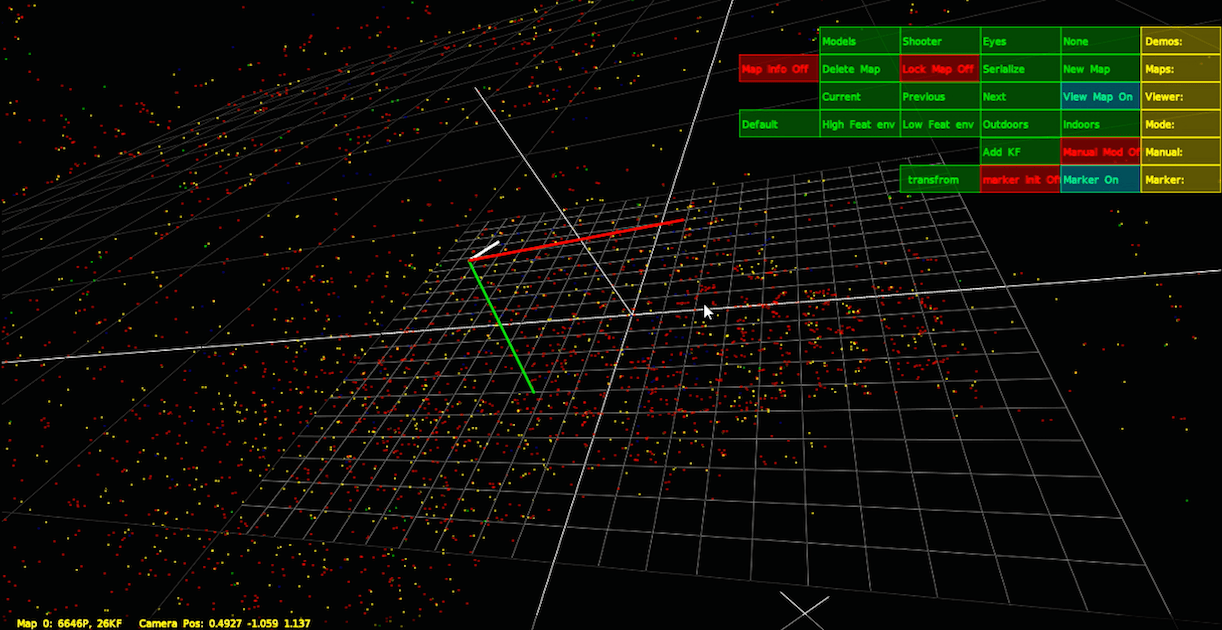

Occlusion detection & handling in monocular SLAM

Real-time monocular SLAM has been under study for around a decade now, with its first breakthrough coming from the introduction of filter-based techniques (SceneLib, in 2004).

Back then, the tracked map was nothing more than a dozen manually selected features that conveyed little information about the tracked scene.

Released in 2007, PTAM laid the groundwork for SfM-based techniques to serve as a successful monocular SLAM solution, allowing thousands of features to be extracted and tracked in real time. Still, the tracked features are nothing more than a point cloud that conveys little meaning about the scene's geometry.

With the introduction of image-based techniques, DTAM achieved pixel-wise tracking and mapping, allowing a dense depth map of the scene to be built in real time.

However, this technique requires a state-of-the-art GPU to cope with its immense computational cost.

Our work is based on PTAM, a sparse SfM-based technique that tracks and maps a scene in real time from a sparse set of extracted features.
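PTAM-style tracking projects the sparse 3D map points into the current frame using a predicted camera pose before searching for them in the image. The sketch below illustrates only that projection step with an ideal pinhole model. It assumes an undistorted camera, a world-to-camera rotation `R` and translation `t`, and intrinsics `fx`, `fy`, `cx`, `cy`; these names and `project_point` are hypothetical and not PTAM's actual API (PTAM itself uses a calibrated camera model that includes lens distortion).

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]  # 3x3 rotation matrix, row-major


def world_to_camera(p_w: Vec3, R: Mat3, t: Vec3) -> Vec3:
    """Rigid transform of a world point into the camera frame: p_c = R * p_w + t."""
    return tuple(sum(R[i][j] * p_w[j] for j in range(3)) + t[i] for i in range(3))


def project_point(
    p_w: Vec3, R: Mat3, t: Vec3,
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int,
) -> Optional[Tuple[float, float]]:
    """Project a 3D map point to pixel coordinates (hypothetical helper).

    Returns None when the point is behind the camera or lands outside the
    image, i.e. when a tracker would not search for it in this frame.
    """
    x, y, z = world_to_camera(p_w, R, t)
    if z <= 0.0:
        return None  # behind (or on) the camera plane: cannot be observed
    u = fx * x / z + cx
    v = fy * y / z + cy
    if not (0.0 <= u < width and 0.0 <= v < height):
        return None  # projects outside the image bounds
    return (u, v)


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    no_translation = (0.0, 0.0, 0.0)
    # Hypothetical 640x480 camera at the world origin looking down +z.
    print(project_point((0.2, -0.1, 1.0), identity, no_translation,
                        500.0, 500.0, 320.0, 240.0, 640, 480))
```

Points that survive this test are the candidates whose image patches a tracker would then search for around the predicted pixel location.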

Our contribution to PTAM is a bottom-up approach for estimating the surfaces of the scene. Knowledge of these surfaces allows us to track the camera's pose within the scene and to estimate which regions are occluded from certain points of view, so that they can be handled properly.
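The page does not say how the estimated surfaces are represented or how the occlusion test is carried out. As one illustration, assuming the surfaces are approximated by triangles (a hypothetical representation), a map point can be flagged as occluded when the segment from the camera center to the point crosses a surface before reaching it. The sketch below uses the standard Möller-Trumbore ray-triangle intersection in place of whatever test this project actually uses; the names `ray_triangle_t`, `is_occluded`, and the `margin` parameter are illustrative only.

```python
from typing import Iterable, Optional, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]  # hypothetical surface patch representation


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle_t(origin: Vec3, direction: Vec3, tri: Triangle,
                   eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: ray parameter t of the hit (origin + t*direction), or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    h = cross(direction, e2)
    a = dot(e1, h)
    if abs(a) < eps:
        return None  # ray is parallel to the triangle's plane
    f = 1.0 / a
    s = sub(origin, v0)
    u = f * dot(s, h)  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = f * dot(direction, q)  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = f * dot(e2, q)
    return t if t > eps else None  # ignore hits at or behind the camera


def is_occluded(camera_center: Vec3, point: Vec3,
                surfaces: Iterable[Triangle], margin: float = 1e-3) -> bool:
    """True if some surface lies strictly between the camera and the map point."""
    # Direction is deliberately not normalized, so t == 1 exactly at the point.
    direction = sub(point, camera_center)
    for tri in surfaces:
        t = ray_triangle_t(camera_center, direction, tri)
        # The margin keeps a point lying on its own surface from occluding itself.
        if t is not None and t < 1.0 - margin:
            return True
    return False


if __name__ == "__main__":
    wall = ((-1.0, -1.0, 1.0), (1.0, -1.0, 1.0), (0.0, 1.0, 1.0))  # patch at z = 1
    print(is_occluded((0.0, 0.0, 0.0), (0.0, 0.0, 2.0), [wall]))  # point behind the wall
```

In a PTAM-like tracker, points flagged this way could be skipped when searching for measurements in the current frame instead of being counted as failed matches; the `margin` trades robustness to surface-estimation noise against missing thin occluders.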

By: Georges Younes