Projects List

- Soft nonholonomic constraints: Theory and applications to optimal control

- Design and Modeling of a Novel Single-Actuator Differentially Driven Robot

- Depth estimation from edge and blur estimation

- Occlusion detection & handling in monocular SLAM

- Autonomous Underwater Vehicle for Monitoring of Maritime Pollution

- Teleoperation of UAV with Haptic Feedback

- Advanced Control Strategies for Unmanned Aerial Vehicles

- Ground Vehicles Driver Assistance and Active Safety Control Systems

- Pedestrian Detection

- Towards Fully Autonomous Self-Supervised Free Space Estimation

- Object-Oriented Structure from Motion

- Humanoid Fall Avoidance

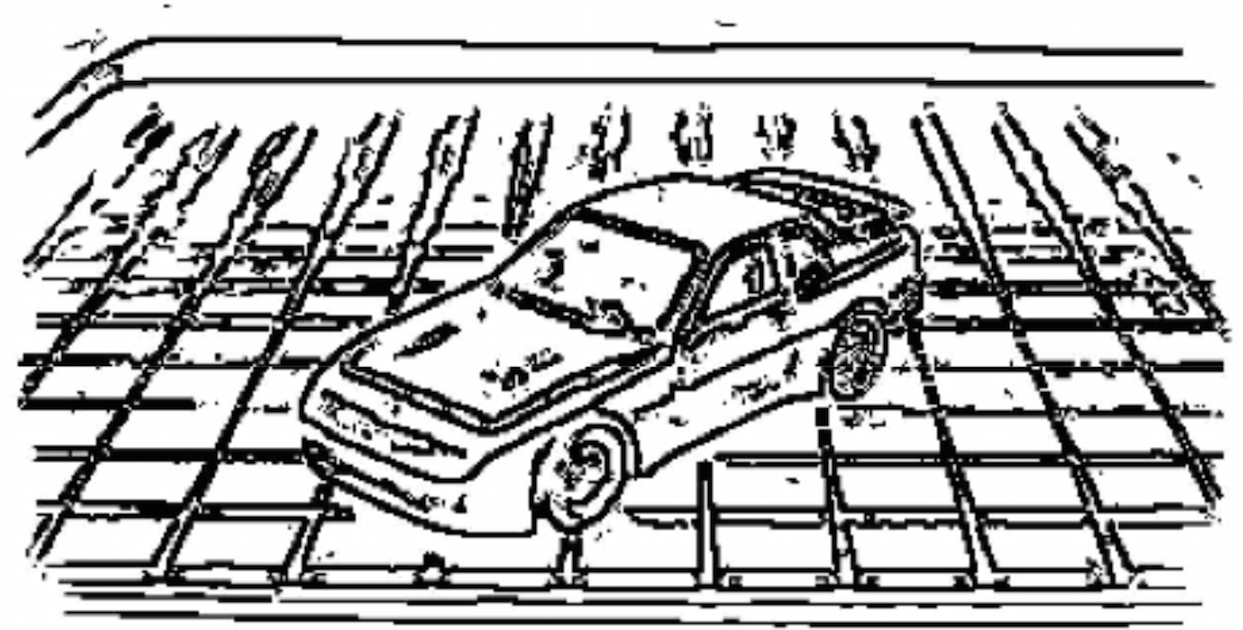

Depth estimation from edge and blur estimation

The standard approach to edge detection models edges as large step changes in intensity. This approach fails to reliably detect and localize edges in natural images, where blur scale and contrast can vary over a broad range. The main problem is that the appropriate spatial scale for local estimation depends on the local structure of the edge, and thus varies unpredictably over the image. Here we show how the depth (distance) of objects in a scene can be estimated from images formed by lenses, using the proposed edge detection method. The recovery is based on measuring the change in the scene’s image due to a known change in three intrinsic camera parameters: (i) the distance between the lens and the image detector, (ii) the focal length of the lens, and (iii) the diameter of the lens aperture. We show that edges spanning a broad range of blur scales and contrasts can be recovered accurately by a single system with no input parameters other than the second moment of the sensor noise. A natural dividend of this approach is a measure of contour thickness, which can be used to estimate focal and penumbral blur. Local scale control is shown to be important for estimating blur in complex images, where the potential for interference between nearby edges of very different blur scales requires that estimates be made at the minimum reliable scale.
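To make the link between blur and depth concrete, the following is a minimal sketch based on the textbook thin-lens model, not the project's actual implementation. It relates the diameter of the blur circle on the sensor to the object distance through the three camera parameters named above. The function names, the units (metres) and the example values are all assumptions chosen for illustration.

```python
def blur_diameter(u, f, s, D):
    """Blur-circle diameter on the sensor for a point at distance u.

    Thin-lens model (an assumption of this sketch):
      u: object distance, f: focal length,
      s: lens-to-detector distance, D: aperture diameter (all in metres).
    """
    return D * s * abs(1.0 / f - 1.0 / u - 1.0 / s)


def depth_from_blur(b, f, s, D, beyond_focus=True):
    """Invert the thin-lens blur model to recover object distance.

    The blur diameter b alone does not say whether the object lies in
    front of or behind the plane of best focus, so the caller must
    supply that side via beyond_focus (hypothetical parameter).
    """
    sign = -1.0 if beyond_focus else 1.0
    inv_u = 1.0 / f - 1.0 / s + sign * b / (D * s)
    if inv_u <= 0.0:
        raise ValueError("blur too large for a finite object distance")
    return 1.0 / inv_u


if __name__ == "__main__":
    # Hypothetical camera: 50 mm lens, detector at 50.5 mm, 25 mm aperture.
    f, s, D = 0.050, 0.0505, 0.025
    for true_u, far in [(10.0, True), (2.0, False)]:
        b = blur_diameter(true_u, f, s, D)
        est_u = depth_from_blur(b, f, s, D, beyond_focus=far)
        print(f"true depth {true_u:.2f} m, blur {b * 1e6:.1f} um, "
              f"recovered {est_u:.2f} m")
```

In this model a single blur measurement is ambiguous about which side of the focal plane the object is on, which is one reason a second image taken after a known change in camera parameters is useful. In practice the blur diameter would have to be estimated from the image, for example from the measured width of blurred edges, and that estimate is sensitive to sensor noise.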

By: Hussein Jlailaty