We are pleased to announce that three of our papers have been accepted to the Sixth Arabic Natural Language Processing Workshop (WANLP 2021), co-located with EACL 2021. The papers were authored by our talented team members Tarek Naous, Wissam Antoun, Reem Mahmoud, and Fady Baly under the supervision of Prof. Hazem Hajj. They target Arabic empathetic conversational agents, generative language models, and language understanding models.

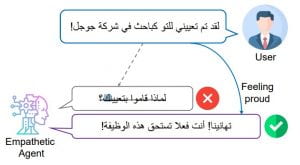

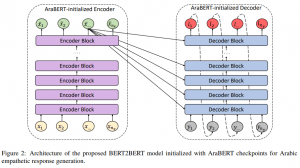

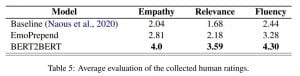

Empathetic BERT2BERT Conversational Model:

Learning Arabic Language Generation with Little Data

Our latest contribution to Arabic conversational AI leverages knowledge transfer from AraBERT in a BERT2BERT architecture. We address the challenges of the low-resource setting and achieve state-of-the-art results in open-domain empathetic response generation.

Paper: https://arxiv.org/abs/2103.04353

Abstract: Enabling empathetic behavior in Arabic dialogue agents is an important aspect of building human-like conversational models. While Arabic Natural Language Processing has seen significant advances in Natural Language Understanding (NLU) with language models such as AraBERT, Natural Language Generation (NLG) remains a challenge. The shortcomings of NLG encoder-decoder models are primarily due to the lack of Arabic datasets suitable to train NLG models such as conversational agents. To overcome this issue, we propose a transformer-based encoder-decoder initialized with AraBERT parameters. By initializing the weights of the encoder and decoder with AraBERT pre-trained weights, our model was able to leverage knowledge transfer and boost performance in response generation. To enable empathy in our conversational model, we train it using the ArabicEmpatheticDialogues dataset and achieve high performance in empathetic response generation. Specifically, our model achieved a low perplexity value of 17.0 and an increase in 5 BLEU points compared to the previous state-of-the-art model. Also, our proposed model was rated highly by 85 human evaluators, validating its high capability in exhibiting empathy while generating relevant and fluent responses in open-domain settings.
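For readers unfamiliar with the perplexity figure quoted in the abstract, here is a minimal sketch of the standard definition: the exponential of the average negative log-likelihood per token. The function name and the toy log-probabilities are hypothetical and chosen for illustration only; this is not the authors' evaluation script, and the tokenization level used in the paper may differ.

```python
import math


def perplexity(token_log_probs):
    """Return exp(average negative log-likelihood), using natural logs."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


# Hypothetical toy case: the model assigns probability 1/17 to each of 4 tokens,
# so it is on average as uncertain as a uniform choice among 17 options.
toy_log_probs = [math.log(1 / 17)] * 4
ppl = perplexity(toy_log_probs)
print(round(ppl, 2))
```

Lower perplexity means the model assigns higher probability to the reference responses.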

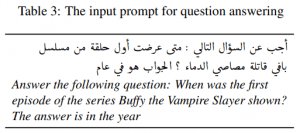

AraGPT2:

Pre-Trained Transformer for Arabic Language Generation

AraGPT2 is a 1.5B-parameter transformer model, the largest for Arabic, trained on 77GB of text for 9 days on a TPUv3-128. The model can generate news articles that are difficult to distinguish from human-written ones. AraGPT2 also shows impressive zero-shot performance on trivia QA.

Paper: https://arxiv.org/abs/2012.15520

GitHub: https://github.com/aub-mind/arabert/tree/master/aragpt2

Abstract: Recently, pre-trained transformer-based architectures have proven to be very efficient at language modeling and understanding, given that they are trained on a large enough corpus. Applications in language generation for Arabic are still lagging in comparison to other NLP advances primarily due to the lack of advanced Arabic language generation models. In this paper, we develop the first advanced Arabic language generation model, AraGPT2, trained from scratch on a large Arabic corpus of internet text and news articles. Our largest model, AraGPT2-mega, has 1.46 billion parameters, which makes it the largest Arabic language model available. The Mega model was evaluated and showed success on different tasks including synthetic news generation, and zero-shot question answering. For text generation, our best model achieves a perplexity of 29.8 on held-out Wikipedia articles. A study conducted with human evaluators showed the significant success of AraGPT2-mega in generating news articles that are difficult to distinguish from articles written by humans. We thus develop and release an automatic discriminator model with a 98% accuracy in detecting model-generated text. The models are also publicly available, hoping to encourage new research directions and applications for Arabic NLP.

AraELECTRA:

Pre-Training Text Discriminators for Arabic Language Understanding

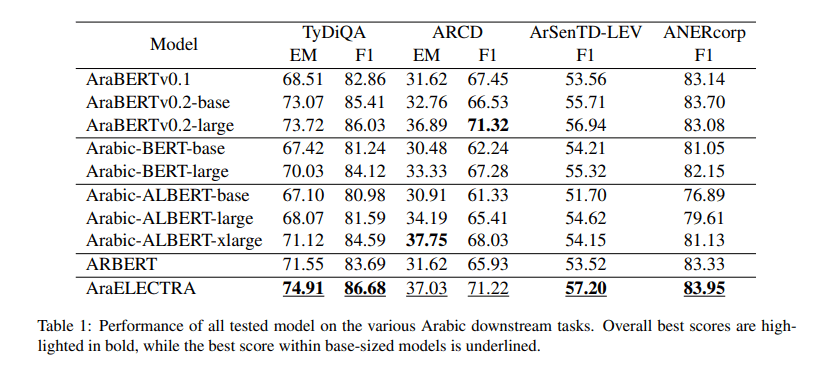

AraELECTRA is our latest advancement in Arabic language understanding. The model was trained on 77GB of Arabic text for 24 days. AraELECTRA achieves impressive performance, especially on question answering tasks.

Paper: https://arxiv.org/abs/2012.15516

GitHub: https://github.com/aub-mind/arabert/tree/master/araelectra

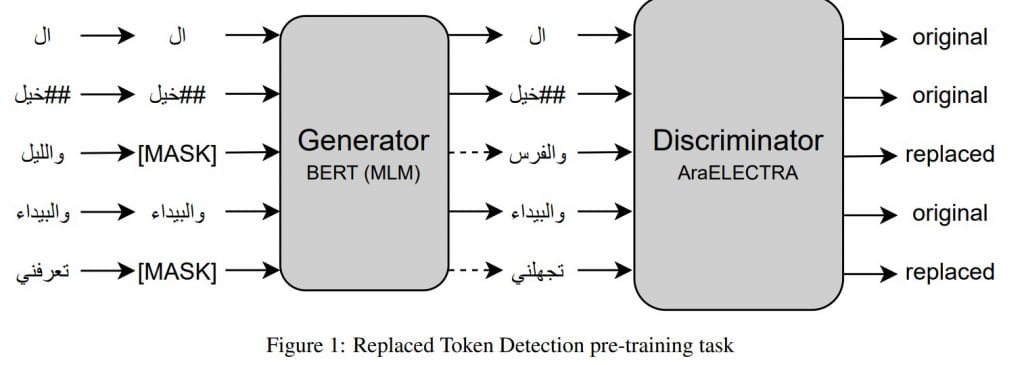

Abstract: Advances in English language representation enabled a more sample-efficient pre-training task by Efficiently Learning an Encoder that Classifies Token Replacements Accurately (ELECTRA). Instead of training a model to recover masked tokens, ELECTRA trains a discriminator model to distinguish true input tokens from corrupted tokens that were replaced by a generator network. On the other hand, current Arabic language representation approaches rely only on pretraining via masked language modeling. In this paper, we develop an Arabic language representation model, which we name AraELECTRA. Our model is pretrained using the replaced token detection objective on large Arabic text corpora. We evaluate our model on multiple Arabic NLP tasks, including reading comprehension, sentiment analysis, and named-entity recognition, and we show that AraELECTRA outperforms current state-of-the-art Arabic language representation models, given the same pretraining data and with even a smaller model size.
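To make the replaced token detection objective described in the abstract more concrete, the sketch below builds the per-token targets a discriminator would be trained to predict: 1 where the generator swapped in a different token, 0 where the token matches the original. The function name and the toy English sentence are hypothetical and used only for illustration; the actual AraELECTRA pipeline operates on subword IDs from large Arabic corpora and is not reproduced here.

```python
def replaced_token_labels(original_tokens, corrupted_tokens):
    """Label each position 1 if the token was replaced, 0 if it is unchanged."""
    if len(original_tokens) != len(corrupted_tokens):
        raise ValueError("sequences must have the same length")
    return [int(o != c) for o, c in zip(original_tokens, corrupted_tokens)]


# Hypothetical toy example: a generator replaced "cooked" with "ate".
original = ["the", "chef", "cooked", "the", "meal"]
corrupted = ["the", "chef", "ate", "the", "meal"]

labels = replaced_token_labels(original, corrupted)
print(list(zip(corrupted, labels)))
```

Because every position receives a label, the discriminator gets a training signal from all input tokens rather than only from a masked subset, which is the source of the sample efficiency mentioned in the abstract.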

Acknowledgments:

This research was supported by the University Research Board (URB) at the American University of Beirut (AUB), and by the TFRC program, which we thank for the free access to cloud TPUs. We also thank As-Safir newspaper for the data access.
