The use of social media platforms has become more prevalent, which has provided tremendous opportunities for people to connect but has also opened the door for misuse with the spread of hate speech and offensive language. This phenomenon has been driving more and more people to more extreme reactions and online aggression, sometimes causing physical harm to individuals or groups of people. There is a need to control and prevent such misuse of online social media through automatic detection of profane language.

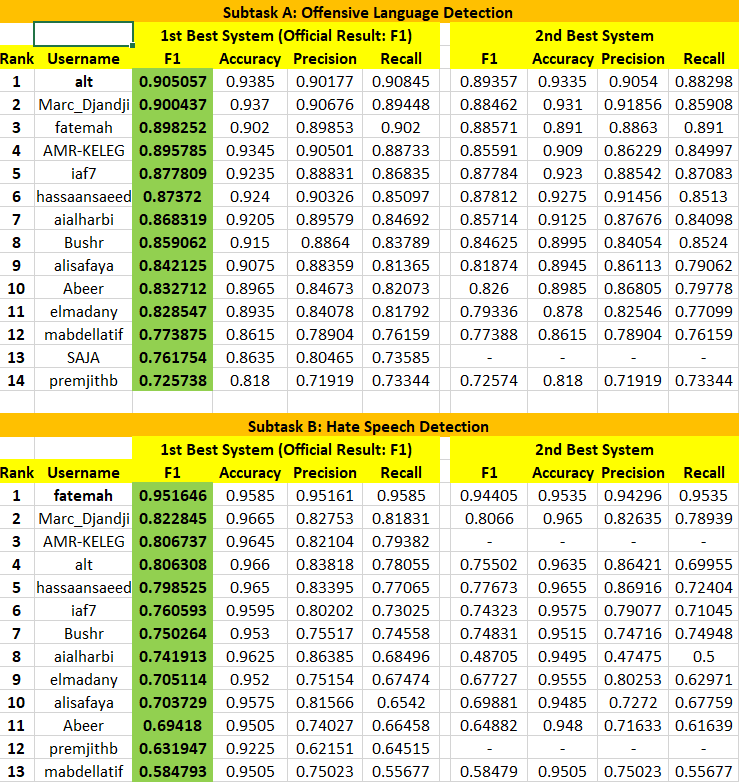

The shared task on Offensive Language Detection at the OSACT4 has aimed at achieving state of art profane language detection methods for Arabic social media. Our team “BERTologists” tackled this problem by leveraging state of the art pretrained Arabic language model, AraBERT, that we augment with the addition of Multi-task learning to enable our model to learn efficiently from little data. Our Multitask AraBERT approach achieved second place in both subtasks A & B, which shows that the model performs consistently across different tasks.

Team Members: Marc Djandji, Fady Baly, Wissam Antoun

Paper: https://www.aclweb.org/anthology/2020.osact-1.16/

Model Code: https://github.com/aub-mind/arabert/blob/master/examples/MTL_AraBERT_Offensive_Lanaguage_detection.ipynb

OSACT4 is The 4th Workshop on Open-Source Arabic Corpora and Processing Tools with Shared Task on Offensive Language Detection held in Marseille, France. 12th May 2020. Co-located with LREC 2020 http://edinburghnlp.inf.ed.ac.uk/workshops/OSACT4/

How did you write these articles? It’s interesting and special in it. It really helped my knowledge a lot. ติดต่อ all bet

I look forward to reading more of your work in the future. hoa hồng affiliate

I am grateful for the opportunity to learn from someone as knowledgeable as you.ติดต่อสอบถาม

Your article is a testament to your expertise and dedication to your craft. cách kiếm tiền online

Your article has broadened my understanding and challenged my preconceptions.ใลน์หวยบี